The term AGI is being trumpeted everywhere, but will every facet of human behavior really end up being replaced by AI? I remain skeptical.

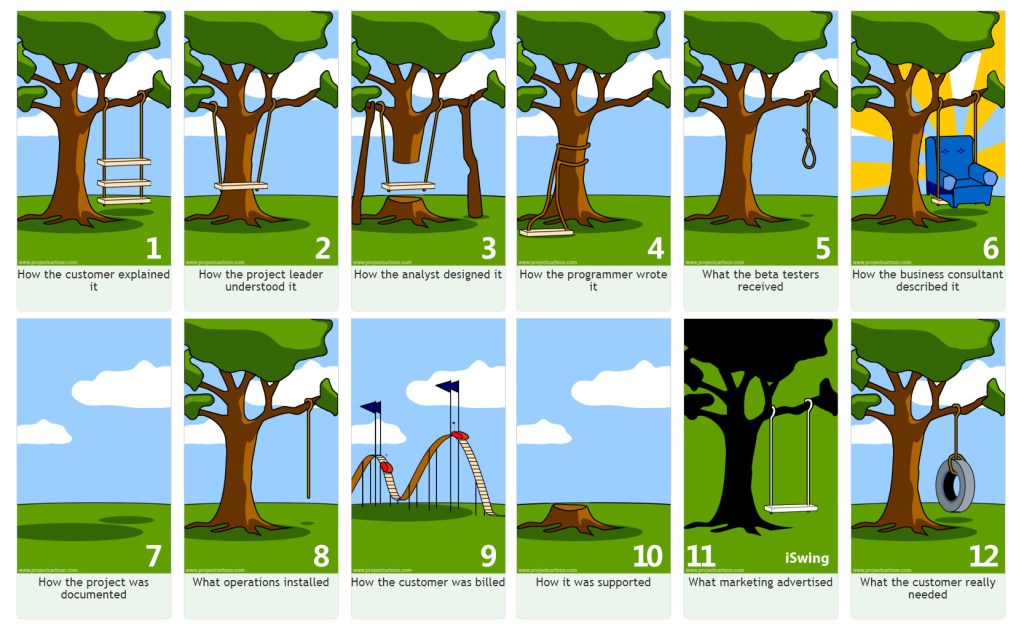

In software, for example, it already looks as though AI will soon be able to handle every stage of even the largest system projects. Yet I still doubt that an AI can build the system the human requester truly wants. Think of the famous “Tree Swing Cartoon.” It is so well known that it needs no explanation here. In that drawing, “How the customer explained it” corresponds to the requirements a human gives the AI, while “What the customer really needed” is the final outcome the AI is expected to deliver. I doubt that AI will be able to bridge the gap between the two.

In practice, AI will almost certainly outperform humans, so the intermediate phases of system development—programming, analysis, testing, and so on—will produce results superior to those of people. If AI also takes over the project-management role, fiascos as grotesque as those in the cartoon may vanish. But will “what the customer explained” and “what the customer really needed” truly converge just because AI is doing the development?

Human instructions and requirements given to AI will always be vague, riddled with errors and, at times, downright nonsensical. A future, highly capable AI might read our thoughts and emotions, infer our hidden needs, and deliver the optimal result. Yet will people be satisfied with a solution that anticipates their mistakes and unspoken wishes? Some will surely look at the “thing the customer really needed” produced by AI and complain that the AI is useless.

Humans are foolish creatures. We possess less knowledge than AI, are less contemplative, and our performance is easily shaken by emotion. Worse, ordinary people often harbor the arrogance of believing themselves exceptional. As long as we carry this folly, we will hesitate to depend entirely on AI.

Even so, there is no doubt that AI will replace much of what humans do. But so long as we remain foolish, we will still insist on doing things ourselves.